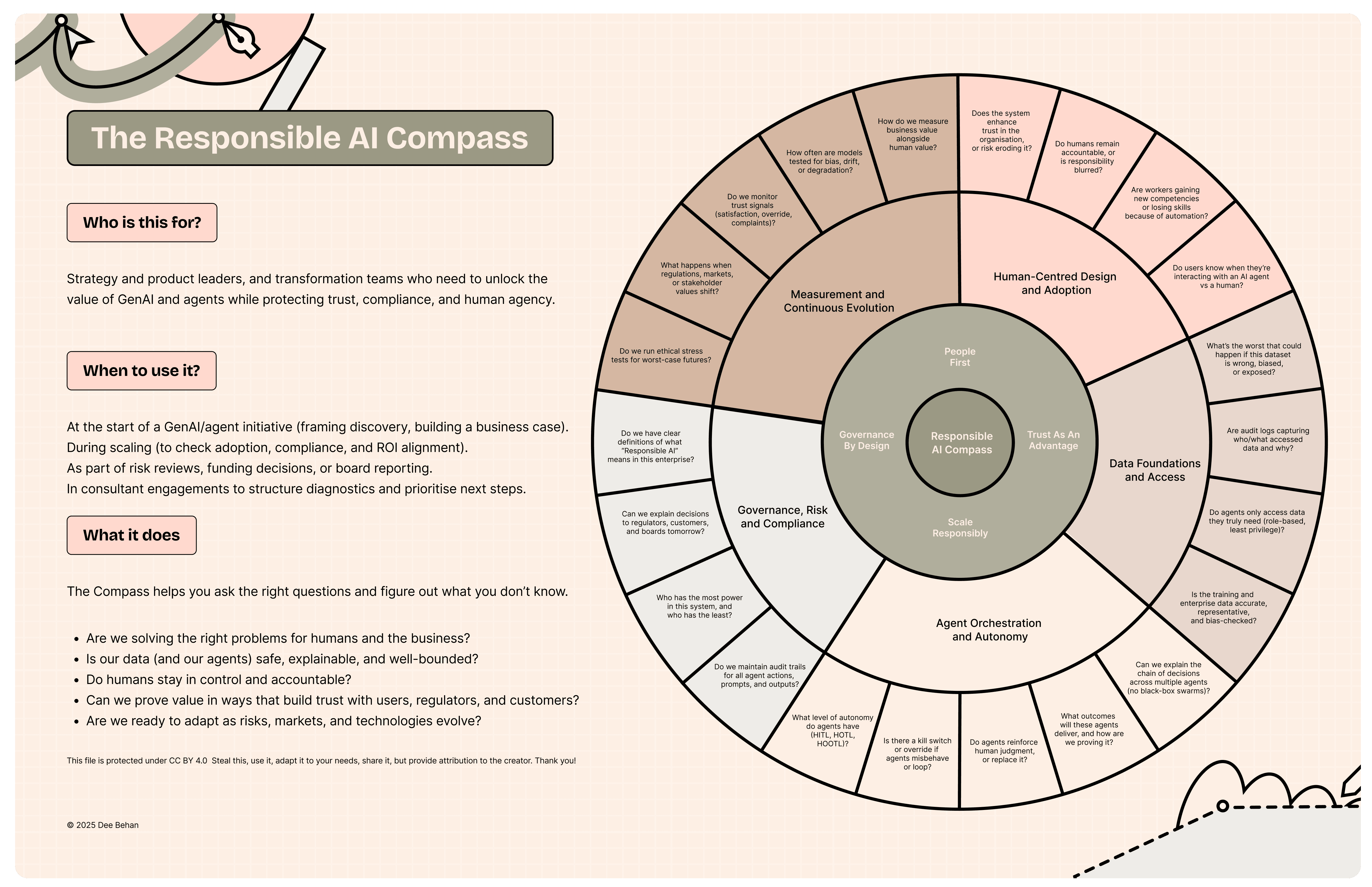

Responsible AI Compass

“Governance and trust.”

Two words I hear in nearly every AI conversation. In boardrooms. In pitch decks. In compliance policies. They’ve become shorthand for doing AI “responsibly.”

But history tells us that guardrails alone don’t guarantee safety, especially when we don’t understand the road we’re on.

The Boeing 737 MAX lesson

In 2018 and 2019, two Boeing 737 MAX planes crashed, killing 346 people. The culprit wasn’t the engines or the airframe, but software: an automated system called MCAS that repeatedly pushed the nose down, overriding pilot input, based on faulty data from a single angle-of-attack sensor.

On paper, Boeing had governance: safety processes, certifications, regulators. But governance didn’t save those lives. The deeper failure was that pilots weren’t fully informed, weren’t trained on the system, and didn’t have a clear way to take back control.

In other words: the design prioritised compliance over human-centred resilience.

Why this matters for AI

That’s an extreme example. But today, as enterprises race to scale GenAI and autonomous agents, I am seeing similar gaps: governance frameworks that lack the other two parts of the bigger strategy, the system (the how) and the leadership (the who and why).

The real questions leaders need to ask are:

Are we solving the right human and business problems?

Is the data our AI relies on safe, explainable, and well-bounded?

Do humans stay in control and accountable?

What value are these systems actually creating, and how will we prove it?

That’s why I created the Responsible AI Compass.

It’s not another policy checklist. It’s a way to step back, interrogate the whole AI strategy, and surface blind spots before they become failures.

Because governance is part of the answer. But trust, adoption, and value? That’s the whole story.

Learn more

To go deeper, the Compass connects to established standards and frameworks:

👉 Australian AI Ethics Principles (2019) – Australia’s baseline for responsible AI.

👉 National AI Centre (CSIRO/Data61) – Practical guides and maturity tools for Australian organisations.

👉 ISO/IEC 42001 (2023) – The first certifiable AI Management System Standard, soon to be adopted locally.

👉 NIST AI Risk Management Framework (2023) – A practical approach to managing AI risk, widely used by enterprises.

👉 OECD AI Principles (2019) – Global principles for trustworthy, human-centred AI (Australia is a signatory).

👉 EU AI Act (2024) – Not Australian law, but shaping global practice and compliance expectations.